Transforming Grammars¶

1. Transforming Grammars¶

We use grammars to represent a programming language. Want to know: Is a given string (or program \(x\)) valid (syntactically correct)? Same as asking if it is in the language.

Last time we showed that if we could transform a CFG into a CFG with no \(\lambda\)-productions, and no rules like \(A \rightarrow B\), then we could determine if \(w\) is in or not in \(L(G)\) in \(2|w|\) rounds, each step adding a terminal or increasing in length. (Linz 5.2)

This works, but it is not fast, that is, not linear!

We will look at lots of methods for transforming grammars. Some will be forms that are easier to work with, some are easier to use in proofs.

Note

Ask yourself: What does it mean "without restricting the power of CFGs"?

Specifically, we will look at restrictions on the right hand side of the production rules. We want to be able to automatically transform an arbitrary CFG into an equivalent restricted CFG.

We will consider CFL without \(\lambda\). It would be easy to add \(\lambda\) to any grammar by adding a new start symbol \(S_0\),

\(S_0 \rightarrow S \mid \lambda\)

Substitution Theorem Let \(G\) be a CFG. Suppose \(G\) contains

\(A \rightarrow x_1Bx_2\)

where \(x_i \in (V \cup T)^{*}\), \(A\) and \(B\) are different variables, and \(B\) has the productions

\(B \rightarrow y_1|y_2|\ldots|y_n\).

Then, we can construct \(G'\) from \(G\) by deleting

\(A \rightarrow x_1Bx_2\)

from \(P\) and adding to it

\(A \rightarrow x_1y_1x_2|x_1y_2x_2|\ldots | x_1y_nx_2\).

Then, \(L(G) = L(G')\).

Question: Why don't we also delete \(B\) rules?

Answer: These might be used by another production

Question: What if \(A\) and \(B\) are the same?

Then the B productions become useless productions.

Definition: A production of the form \(A \rightarrow Ax\), \(A \in V, x \in (V \cup T)^*\) is left recursive.

Example: Previous expression grammar was left recursive. (from Chapter 5 notes)

It is left recursive because it has SOME left recursive productions. Not all of the productions are left recursive.

\(E \rightarrow E+T \mid T\)\(T \rightarrow T*F \mid F\)\(F \rightarrow I \mid (E)\)\(I \rightarrow a \mid b\)

A top-down parser (like LL parsing) would want to derive the leftmost terminal as soon as possible. But in the left recursive grammar above, in order to derive a sentential form that has the leftmost terminal, we have to derive a sentential form that has other terminals in it.

Derivation of \(a+b+a+a\) is:

NOTE: nicer to have a grammar that would derive the \(a\) first without all the other +'s.

We will eliminate the left recursion so that we can derive a sentential form with the leftmost terminal and no other terminals.

Theorem (Removing Left recursion) Let \(G = (V,T,S,P)\) be a CFG. Divide productions for variable \(A\) into left-recursive and non left-recursive productions:

\(A \rightarrow Ax_1 \mid Ax_2 \mid \ldots \mid Ax_n\)\(A \rightarrow y_1 \mid y_2 \mid \ldots \mid y_m\)

where \(x_i\), \(y_i\) are in \((V \cup T)^*\).

Any derivation will start with \(y_i\).

\(A\) is not a prefix of any of the second type.

Then \(G = (V \cup \{Z\}, T, S, P')\) and \(P'\) replaces rules of form above by

\(A \rightarrow y_i \mid y_iZ, i = 1, 2, \ldots, m\)\(Z \rightarrow x_i \mid x_iZ, i = 1, 2, \ldots, n\)

Note

Consider a derivation: \(y_3x_7x_1x_3\)

Example 1

When you get rid of left-recursion, the grammar is in the appropriate form for a top-down parser, but the grammar has more variables and productions.

Now, the derivation of \(a+b+a+a\) is:

\(E \Rightarrow TZ \Rightarrow FZ \Rightarrow IZ \Rightarrow aZ\)

Didn't have to look at any other terminals yet!

Useless productions

\(S \rightarrow aB \mid bA\)\(A \rightarrow aA\)\(B \rightarrow Sa\)\(C \rightarrow cBc \mid a\)

What can you say about this grammar?

\(A\), \(S\), and \(B\) are useless variables since they can't derive a string of terminals. \(C\) is useless because you can't get \(S \stackrel{*}{\Rightarrow} xCy \stackrel{*}{\Rightarrow} w\), where \(w \in T^*\).

Theorem: (useless productions) Let \(G\) be a CFG. Then \(\exists\ G'\) that does not contain any useless variables or productions such that \(L(G) = L(G')\).

To Remove Useless Productions:

Let \(G = (V,T,S,P)\).

Then \(G_1 = (V_1, T, S, P_1)\) has no variables that can't derive strings.

Now we need to get rid of productions we can't use.

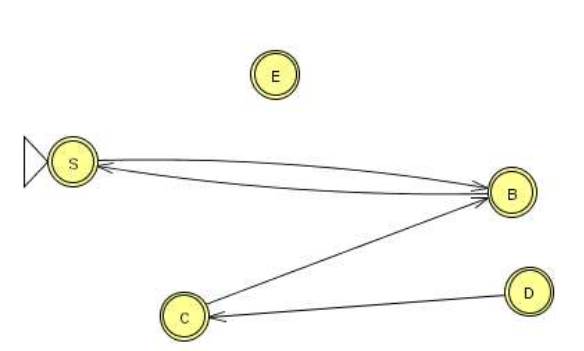

- Draw Variable Dependency Graph

For \(A \rightarrow xBy\), draw \(A \rightarrow B\).

Draw \(A\) in a circle, \(B\) in a circle, and an arc from \(A\) to \(B\).

Remove productions for \(V\) if there is no path from \(S\) to \(V\) in the dependency graph. Resulting Grammar \(G'\) is such that \(L(G) = L(G')\) and \(G'\) has no useless productions.

Example 2

\(V_1 = \{B, C, D, E, S\}\), \(A\) is useless.

Dependency graph:

Q: How would you implement II? How do you know which nodes are accessible from S? Use DFS or BFS.

NOTE: Last time talked about simpler CFG that had no \(\lambda\)-productions, now we will show how to get rid of them.

Theorem (remove \(\lambda\) productions) Let \(G\) be a CFG with \(\lambda\) not in \(L(G)\). Then \(\exists\) a CFG \(G'\) having no \(\lambda\)-productions such that \(L(G) = L(G')\).

To Remove \(\lambda\) -productions

Example 3

\(V_n = \{B, C, A\}\)

NOTE: Don't add \(A \rightarrow \lambda\)!

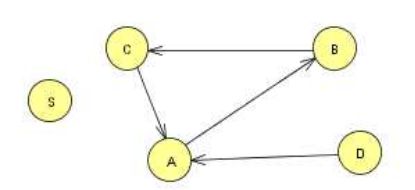

Definition: Unit Production

\(A \rightarrow B\)

where \(A, B \in V\).

Consider removing unit productions:

Suppose we have

\[\begin{split}\begin{array}{lll} A \rightarrow B & \ \ \ \ \ \mbox{becomes} \ \ \ \ \ & A \rightarrow a \mid ab \\ B \rightarrow a \mid ab \\ \end{array}\end{split}\]

But what if we have

\[\begin{split}\begin{array}{lll} A \rightarrow B & \ \ \ \ \ \mbox{becomes} \ \ \ \ \ & A \rightarrow C \\ B \rightarrow C & & B \rightarrow A \\ C \rightarrow A & & C \rightarrow B \\ \end{array}\end{split}\]But we don't get rid of unit-productions!

Theorem (Remove unit productions) Let \(G = (V, T, S, P)\) be a CFG without \(\lambda\)-productions. Then \(\exists\) CFG \(G = (V', T', S, P')\) that does not have any unit-productions and \(L(G) = L(G')\).

To Remove Unit Productions:

1. Find for each \(A\), all \(B\) such that \(A \stackrel{*}{\Rightarrow} B\)(Draw a dependency graph howing relationship of Unit productions. Just draw arc for each \(A \rightarrow B\) rule.Draw \(A\) in a circle, \(B\) in a circle, and an arc from \(A\) to \(B\).)2. Construct \(G' = (V', T', S, P')\) by(a) Put all non-unit productions in \(P'\)(b) For all \(A \stackrel{*}{\Rightarrow} B\) such that \(B \rightarrow y_1 \mid y_2 \mid \ldots y_n \in P'\), put \(A \rightarrow y_1 \mid y_2 \mid \ldots y_n \in P'\)Run DFS with \(A\) as root.Note the star in \(A \stackrel{*}{\Rightarrow} B\)Never put a unit production in \(P'\).

Example 4

Theorem: Let \(L\) be a CFL that does not contain \(\lambda\). Then \(\exists\) a CFG for \(L\) that does not have any useless productions, \(\lambda\)-productions, or unit-productions.

Proof:

1. Remove \(\lambda\)-productions2. Remove unit-productions3. Remove useless productions

Note order is very important. Removing \(\lambda\)-productions can create unit-productions! QED.

There are additional examples in the book.

Definition: A CFG is in Chomsky Normal Form (CNF) if all productions are of the form

\(A \rightarrow BC \ \ or A \rightarrow a\)

where \(A, B, C \in V\) and \(a \in T\).

Why would you want to put a grammar in this form? Because it is easier to work with in proofs.

Theorem 1 :

Any CFG \(G\) with \(\lambda\) not in \(L(G)\) has an equivalent grammar in CNF.

Proof:

Example 5

NOTE: Can get rid of \(\lambda\)-productions and unit productions first!

Definition: A CFG is in Greibach normal form (GNF) if all productions have the form

\(A \rightarrow ax\)

where \(a \in T\) and \(x \in V^*\)

This is like an s-grammar (or simple grammar, p.142 Linz), except the s-grammar definition includes a further restriction that any pair \((A, a)\) can occur at most in one rule.

This is so that you wouldn't have to backtrack (only one choice to match the derivation of a string). So it very restrictive.

Theorem 2

For every CFG \(G\) with \(\lambda\) not in \(L(G)\), \(\exists\) a grammar in GNF.

Proof:

1. Rewrite grammar in CNF.2. Relabel Variables \(A_1, A_2, \ldots A_n\)3. Eliminate left recursion and use substitution to get all productions into the form:\(A_i \rightarrow A_jx_j, j > i\)\(Z_i \rightarrow A_jx_j, j \le n\)\(A_i \rightarrow ax_i\)where \(a \in T, x_i \in V*\), and \(Z_i\) are new variables introduced for left recursion.Use Theorems 6.1 and 6.2 to get rid of left recursion.4. All productions with \(A_n\) are in the correct form, \(A_n \rightarrow ax_n\). Use these productions as substitutions to get \(A_{n-1}\) productions in the correct form. Repeat with \(A_{n-2}\), \(A_{n-3}\), etc until all productions are in the correct form.

WHAT YOU SHOULD KNOW: know forms, GNF, CNF, unit production, left recursion, etc. Do not need to memorize rules for transforming, but should understand how to do it.